Examples

This page contains examples of using Raster Vision on open datasets. Unless otherwise stated, all commands should be run inside the Raster Vision Docker container. See Docker Images for info on how to do this.

How to Run an Example

There is a common structure across all of the examples, which represents a best practice for defining experiments. Running an example involves the following steps:

1. Acquire the raw dataset.
2. (Optional) Get the processed dataset, which is derived from the raw dataset, either by running a Jupyter notebook or by downloading it.
3. (Optional) Do an abbreviated test run of the experiment locally on a small subset of the data.
4. Run the full experiment on a GPU.
5. Inspect the output.
6. (Optional) Make predictions on new imagery.

Each of the examples has several arguments that can be set on the command line:

- The input data for each experiment is divided into two directories: the raw data, which is publicly distributed, and the processed data, which is derived from the raw data. These two directories are set using the raw_uri and processed_uri arguments.
- The output generated by the experiment is stored in the directory set by the root_uri argument.
- The raw_uri, processed_uri, and root_uri can each be local or remote (on S3), and don't need to agree on whether they are local or remote.
- Experiments have a test argument, which runs an abbreviated experiment for testing/debugging purposes.

In the next section, we describe in detail how to run one of the examples, SpaceNet Rio Chip Classification. For other examples, we only note example-specific details.

Chip Classification: SpaceNet Rio Buildings

This example performs chip classification to detect buildings on the Rio AOI of the SpaceNet dataset.

Step 1: Acquire Raw Dataset

The dataset is stored on AWS S3 at s3://spacenet-dataset. You will need an AWS account to access this dataset, but you will not be charged for accessing it. (To forward your AWS credentials into the container, use docker/run --aws.)

Optional: to run this example with the data stored locally, first copy the data using something like the following inside the container.

aws s3 sync s3://spacenet-dataset/AOIs/AOI_1_Rio/ /opt/data/spacenet-dataset/AOIs/AOI_1_Rio/

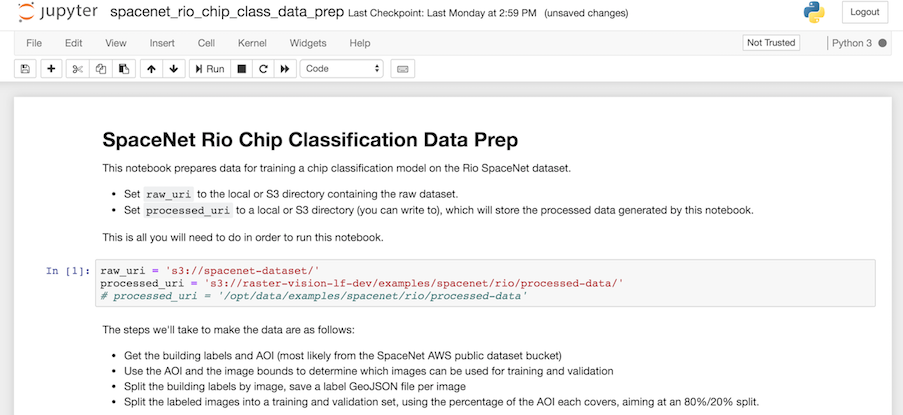

Step 2: Run the Jupyter Notebook

You’ll need to do some data preprocessing, which can be done using the supplied Jupyter notebook.

docker/run --jupyter [--aws]

The --aws option is only needed if pulling data from S3. In Jupyter inside the browser, navigate to the rastervision/examples/chip_classification/spacenet_rio_data_prep.ipynb notebook. Set the URIs in the first cell and then run the rest of the notebook. Set the processed_uri to a local or S3 URI depending on where you want to run the experiment.

Step 3: Do a test run locally

The experiment we want to run is in spacenet_rio.py. To run it, first open a console inside the Docker container using:

docker/run [--aws] [--gpu] [--tensorboard]

The --aws option is only needed if running experiments on AWS or using data stored on S3. The --gpu option should only be used if running on a local GPU.

The --tensorboard option should be used if you are running locally and would like to view TensorBoard. The test run can be executed using something like:

```shell
export RAW_URI="s3://spacenet-dataset/"
export PROCESSED_URI="/opt/data/examples/spacenet/rio/processed-data"
export ROOT_URI="/opt/data/examples/spacenet/rio/local-output"

rastervision run local rastervision.examples.chip_classification.spacenet_rio \
    -a raw_uri $RAW_URI -a processed_uri $PROCESSED_URI -a root_uri $ROOT_URI \
    -a test True --splits 2
```

The sample above assumes that the raw data is on S3, and the processed data and output are stored locally. The raw_uri directory is assumed to contain an AOIs/AOI_1_Rio subdirectory. This runs two parallel jobs for the chip and predict commands via --splits 2. See rastervision --help and rastervision run --help for more usage information.
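If you prefer to drive the test run from Python (for example, from a notebook), you can assemble the command above programmatically. The sketch below is a hypothetical helper and is not part of Raster Vision. It uses only the runner, module path, -a arguments, and --splits flag shown in the command above. By default it prints the command rather than running it.

```python
import os
import shlex
import subprocess

# Module path of the example, copied from the command above.
EXAMPLE_MODULE = "rastervision.examples.chip_classification.spacenet_rio"


def build_run_command(runner, raw_uri, processed_uri, root_uri,
                      test=True, splits=2):
    """Return the argv for `rastervision run <runner> ...` (hypothetical helper)."""
    argv = ["rastervision", "run", runner, EXAMPLE_MODULE]
    # Each experiment argument is passed as its own `-a <name> <value>` triple.
    for name, value in [("raw_uri", raw_uri),
                        ("processed_uri", processed_uri),
                        ("root_uri", root_uri),
                        ("test", str(test))]:  # str(True) -> "True"
        argv += ["-a", name, value]
    argv += ["--splits", str(splits)]
    return argv


def run_test_locally(dry_run=True):
    """Build the local test-run command from the exported environment variables."""
    argv = build_run_command(
        "local",
        os.environ["RAW_URI"],
        os.environ["PROCESSED_URI"],
        os.environ["ROOT_URI"],
        test=True,
        splits=2,
    )
    print(shlex.join(argv))  # show the exact shell command
    if not dry_run:
        # Needs the rastervision CLI on PATH, e.g. inside the Docker container.
        subprocess.run(argv, check=True)
    return argv
```

Calling run_test_locally(dry_run=False) after the exports above would execute the same command as the shell example.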

Note that when running with -a test True, some crops of the test scenes are created and stored in processed_uri/crops/. All of the examples that use big image files use this trick to make the experiment run faster in test mode.

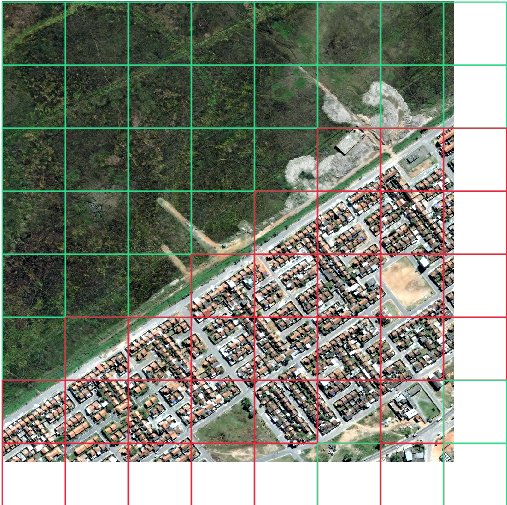

After running this, the main thing to check is that it didn’t crash, and that the visualizations of the training and validation chips look correct. These “debug chips” for each of the data splits can be found in $ROOT_URI/train/dataloaders/.

Step 4: Run full experiment

To run the full experiment on GPUs using AWS Batch, use something like the following. Note that all the URIs are on S3 since remote instances will not have access to your local file system.

```shell
export RAW_URI="s3://spacenet-dataset/"
export PROCESSED_URI="s3://mybucket/examples/spacenet/rio/processed-data"
export ROOT_URI="s3://mybucket/examples/spacenet/rio/remote-output"

rastervision run batch rastervision.examples.chip_classification.spacenet_rio \
    -a raw_uri $RAW_URI -a processed_uri $PROCESSED_URI -a root_uri $ROOT_URI \
    -a test False --splits 8
```
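Because batch jobs cannot read your local file system, a quick check that every URI is on S3 can prevent a failed submission. This is a minimal sketch. check_batch_uris is a hypothetical helper, and it only tests for the s3:// prefix. It does not check whether the objects actually exist.

```python
def check_batch_uris(**uris):
    """Raise ValueError if any URI is not on S3 (hypothetical pre-flight check).

    Remote AWS Batch instances cannot see your local file system, so every
    URI passed to a batch run should point at S3.
    """
    local = sorted(name for name, uri in uris.items()
                   if not uri.startswith("s3://"))
    if local:
        raise ValueError(
            "These URIs must be on S3 for a batch run: " + ", ".join(local))
```

For example, check_batch_uris(raw_uri=..., processed_uri=..., root_uri=...) returns silently for the three S3 URIs exported above and raises if any of them is a local path.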

For instructions on setting up AWS Batch resources and configuring Raster Vision to use them, see Running on AWS Batch. To monitor the training process using TensorBoard, visit <public dns>:6006 for the EC2 instance running the training job.

If you would like to run on a local GPU, replace batch with local, and use local URIs. To monitor the training process using TensorBoard, visit localhost:6006, assuming you used docker/run --tensorboard.

Step 5: Inspect results#

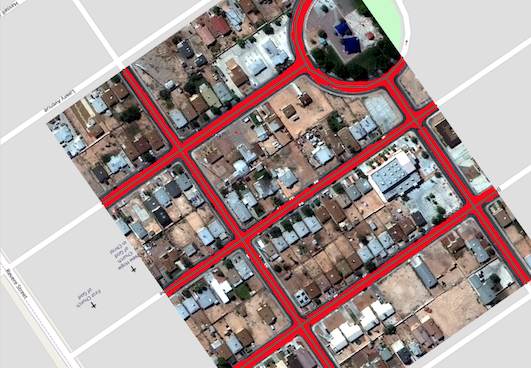

After everything completes, which should take about 1.5 hours if you’re running on AWS using a p3.2xlarge instance for training and 8 splits, you should be able to find the predictions over the validation scenes in $root_uri/predict/. The imagery and predictions are best viewed in QGIS, an example of which can be seen below. Cells that are predicted to contain buildings are red, and background are green.

The evaluation metrics can be found in $root_uri/eval/eval.json. This is an example of the scores from a run, which show an F1 score of 0.97 for detecting chips with buildings.

```json
[
  {
    "precision": 0.9802512682554008,
    "recall": 0.9865974924340684,
    "f1": 0.9833968183611386,
    "count_error": 0.0,
    "gt_count": 2313.0,
    "class_id": 0,
    "class_name": "no_building"
  },
  {
    "precision": 0.9789227645464389,
    "recall": 0.9685147159479809,
    "f1": 0.9736038795756798,
    "count_error": 0.0,
    "gt_count": 1461.0,
    "class_id": 1,
    "class_name": "building"
  },
  {
    "precision": 0.9797369746892128,
    "recall": 0.9795972443031267,
    "f1": 0.9796057522335405,
    "count_error": 0.0,
    "gt_count": 3774.0,
    "class_id": null,
    "class_name": "average"
  }
]
```
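In the sample above, the average row matches a gt_count-weighted mean of the two per-class rows. The sketch below recomputes that mean. It assumes eval.json is a local file with the list-of-objects layout shown, so if root_uri is on S3, download the file first. The function names are illustrative.

```python
import json
import math


def load_eval(path):
    """Read a local eval.json file into a list of score dicts."""
    with open(path) as f:
        return json.load(f)


def weighted_average(rows, keys=("precision", "recall", "f1")):
    """gt_count-weighted mean of each metric over the per-class rows."""
    # Per-class rows have an integer class_id; the summary row has class_id null.
    per_class = [r for r in rows if r["class_id"] is not None]
    total = sum(r["gt_count"] for r in per_class)
    return {k: sum(r[k] * r["gt_count"] for r in per_class) / total
            for k in keys}


def matches_average(rows, tol=1e-6):
    """True if the "average" row agrees with the recomputed weighted mean."""
    average = next(r for r in rows if r["class_name"] == "average")
    recomputed = weighted_average(rows)
    return all(math.isclose(recomputed[k], average[k], abs_tol=tol)
               for k in recomputed)
```

For example, matches_average(load_eval("eval.json")) on a local copy of $root_uri/eval/eval.json tells you whether the summary row is the weighted mean described above.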

More evaluation details can be found here.

Semantic Segmentation: SpaceNet Vegas

This experiment contains an example of doing semantic segmentation using the SpaceNet Vegas dataset which has labels in vector form. It allows for training a model to predict buildings or roads. Note that for buildings, polygon output in the form of GeoJSON files will be saved to the predict directory alongside the GeoTIFF files.

Arguments:

- raw_uri should be set to the root of the SpaceNet data repository, which is at s3://spacenet-dataset, or a local copy of it. A copy only needs to contain the AOIs/AOI_2_Vegas subdirectory.
- target can be buildings or roads.
- processed_uri should not be set because there is no processed data in this example.
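Here is a minimal sketch of assembling these arguments. It assumes target is passed with -a in the same way as the URI arguments. vegas_args is a hypothetical helper. It rejects targets other than buildings and roads and never emits processed_uri.

```python
VALID_TARGETS = {"buildings", "roads"}


def vegas_args(raw_uri, root_uri, target="buildings", test=False):
    """Return `-a name value` pairs for the Vegas example (hypothetical helper).

    processed_uri is deliberately omitted: this example has no processed data.
    """
    if target not in VALID_TARGETS:
        raise ValueError(
            f"target must be one of {sorted(VALID_TARGETS)}, got {target!r}")
    pairs = {"raw_uri": raw_uri, "root_uri": root_uri,
             "target": target, "test": str(test)}
    args = []
    for name, value in pairs.items():
        args += ["-a", name, value]
    return args
```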

Semantic Segmentation: ISPRS Potsdam

This experiment performs semantic segmentation on the ISPRS Potsdam dataset. The dataset consists of 5cm aerial imagery over Potsdam, Germany, segmented into six classes: building, tree, low vegetation, impervious, car, and clutter. For more info see our blog post.

Data:

The dataset can be downloaded from here. Access to the files is password protected, but the password is provided on the same site. After downloading, unzip 4_Ortho_RGBIR.zip and 5_Labels_for_participants.zip into a directory, and then upload it to S3 if desired.
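You can do the unzip step with Python's standard zipfile module. This sketch assumes both archives sit in a local download directory. It also assumes each archive unpacks into a top-level folder with the same name as the archive, which is the layout raw_uri expects. The function name and any directory names you pass in are hypothetical.

```python
import zipfile
from pathlib import Path

POTSDAM_ZIPS = ["4_Ortho_RGBIR.zip", "5_Labels_for_participants.zip"]


def unzip_potsdam(download_dir, raw_dir):
    """Extract the Potsdam archives into raw_dir and list its top-level entries."""
    raw_dir = Path(raw_dir)
    raw_dir.mkdir(parents=True, exist_ok=True)
    for name in POTSDAM_ZIPS:
        # Assumes each archive contains a folder named after the archive.
        with zipfile.ZipFile(Path(download_dir) / name) as zf:
            zf.extractall(raw_dir)
    return sorted(p.name for p in raw_dir.iterdir())
```

If the returned list does not show the two expected folders, inspect the archives before pointing raw_uri at the directory.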

Arguments:

- raw_uri should contain the 4_Ortho_RGBIR and 5_Labels_for_participants subdirectories.
- processed_uri should be set to a directory that will be used to store test crops.
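Before launching a run with local data, it can help to confirm that raw_uri has the expected layout. This sketch checks local directories only. The helper is hypothetical, and S3 URIs would need to be checked separately (for example, by listing them with the aws CLI).

```python
from pathlib import Path


def missing_subpaths(root, required):
    """Return the required relative paths that do not exist under a local root."""
    root = Path(root)
    return [rel for rel in required if not (root / rel).exists()]
```

For example, missing_subpaths(raw_dir, ["4_Ortho_RGBIR", "5_Labels_for_participants"]) returns an empty list when both subdirectories are present. Here, raw_dir is whatever local directory you plan to pass as raw_uri.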

After training a model, the average F1 score was 0.89. More evaluation details can be found here.

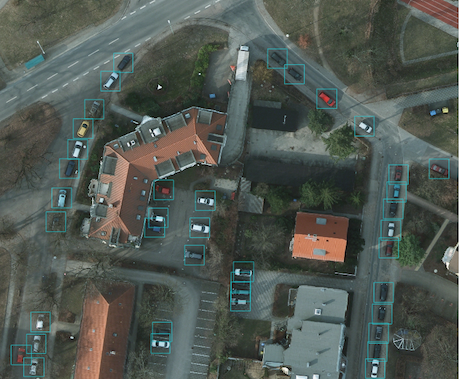

Object Detection: COWC Potsdam Cars

This experiment performs object detection on cars with the Cars Overhead With Context dataset over Potsdam, Germany.

Data:

1. The dataset can be downloaded from here. After downloading, unzip 4_Ortho_RGBIR.zip into a directory, and then upload it to S3 if desired. (This example uses the same imagery as Semantic Segmentation: ISPRS Potsdam.)
2. Download the processed labels and unzip them. These files were generated from the COWC car detection dataset using some scripts. TODO: Get these scripts into runnable shape.

Arguments:

- raw_uri should point to the imagery directory created above, and should contain the 4_Ortho_RGBIR subdirectory.
- processed_uri should point to the labels directory created above. It should contain the labels/all subdirectory.

After training a model, the car F1 score was 0.95. More evaluation details can be found here.

Object Detection: xView Vehicles

This experiment performs object detection to find vehicles using the DIUx xView Detection Challenge dataset.

Data:

1. Sign up for an account for the DIUx xView Detection Challenge. Navigate to the downloads page and download the zipped training images and labels. Unzip both of these files, place their contents in a directory, and upload it to S3 if desired.
2. Run the xview-data-prep.ipynb Jupyter notebook, pointing the raw_uri to the directory created above.

Arguments:

- The raw_uri should point to the directory created above, and contain a labels GeoJSON file named xView_train.geojson and a directory named train_images.
- The processed_uri should point to the processed data generated by the notebook.
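Here is a quick sanity check of a local xView raw directory before you run the notebook. It is a sketch with some assumptions. It relies only on the standard GeoJSON FeatureCollection structure (a top-level features list), not on any xView-specific properties. It also loads the whole labels file into memory, which may be slow for a large file. The helper name is hypothetical.

```python
import json
from pathlib import Path


def inspect_xview_raw(raw_dir):
    """Return (number of label features, number of image files) for a local raw dir."""
    raw_dir = Path(raw_dir)
    labels = raw_dir / "xView_train.geojson"
    images = raw_dir / "train_images"
    if not labels.is_file() or not images.is_dir():
        raise FileNotFoundError(
            f"expected xView_train.geojson and train_images/ in {raw_dir}")
    with open(labels) as f:
        collection = json.load(f)
    # A GeoJSON FeatureCollection keeps its objects in the "features" list.
    n_features = len(collection["features"])
    n_images = sum(1 for p in images.iterdir() if p.is_file())
    return n_features, n_images
```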

After training a model, the vehicle F1 score was 0.61. More evaluation details can be found here.

Model Zoo

Using the Model Zoo, you can download model bundles which contain pre-trained models and meta-data, and then run them on sample test images that the model wasn’t trained on.

rastervision predict <model bundle> <infile> <outfile>
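To run a model bundle over several sample images, you can loop the predict command from Python. This is a hypothetical helper. The -pred output naming is an assumption, so adjust it to match the output your task should produce. Run rastervision predict --help to see the options your version supports. By default, the helper only builds the commands.

```python
import subprocess
from pathlib import Path


def predict_all(bundle, infiles, out_dir, dry_run=True):
    """Build (and optionally run) `rastervision predict <bundle> <infile> <outfile>`."""
    out_dir = Path(out_dir)
    commands = []
    for infile in infiles:
        p = Path(infile)
        # Hypothetical naming scheme: <stem>-pred<suffix> inside out_dir.
        outfile = out_dir / f"{p.stem}-pred{p.suffix}"
        argv = ["rastervision", "predict", str(bundle), str(infile), str(outfile)]
        commands.append(argv)
        if not dry_run:
            out_dir.mkdir(parents=True, exist_ok=True)
            subprocess.run(argv, check=True)  # needs the rastervision CLI on PATH
    return commands
```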

Note that the input file is assumed to have the same channel order and statistics as the images the model was trained on. See rastervision predict --help to see options for manually overriding these. It shouldn’t take more than a minute on a CPU to make predictions for each sample. For some of the examples, there are also model files that can be used for fine-tuning on another dataset.

Disclaimer: These models are provided for testing and demonstration purposes and aren’t particularly accurate. As is usually the case for deep learning models, the accuracy drops greatly when used on input that is outside the training distribution. In other words, a model trained on one city probably won’t work well on another city (unless they are very similar) or at a different imagery resolution.

When unzipped, the model bundle contains a model.pth file which can be used for fine-tuning.
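To reuse the weights for fine-tuning, you first need to extract the model.pth file from the bundle. This sketch assumes the bundle is a zip archive, as "unzipped" suggests. It searches the extracted tree for model.pth rather than assuming the file's exact location. The helper name is hypothetical.

```python
import zipfile
from pathlib import Path


def extract_model_weights(bundle_zip, out_dir):
    """Extract a model bundle into out_dir and return the path to its model.pth."""
    out_dir = Path(out_dir)
    with zipfile.ZipFile(bundle_zip) as zf:
        zf.extractall(out_dir)
    matches = sorted(out_dir.rglob("model.pth"))
    if not matches:
        raise FileNotFoundError(f"no model.pth found in {bundle_zip}")
    return matches[0]
```

You could then load the returned path with torch.load(path, map_location="cpu"). How those weights map onto a model for fine-tuning depends on your setup and is not covered here.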

Note: The model bundles linked below are only compatible with Raster Vision version 0.30 or later.

| Dataset | Task | Model Type | Model Bundle | Sample Image |
|---|---|---|---|---|
| SpaceNet Rio Buildings | Chip Classification | Resnet 50 | | |
| SpaceNet Vegas Buildings | Semantic Segmentation | DeeplabV3 / Resnet50 | | |
| SpaceNet Vegas Roads | Semantic Segmentation | DeeplabV3 / Resnet50 | | |
| ISPRS Potsdam | Semantic Segmentation | Panoptic FPN / Resnet50 | | |
| COWC Potsdam (Cars) | Object Detection | Faster-RCNN / Resnet18 | | |
| xView (Vehicles) | Object Detection | Faster-RCNN / Resnet50 | | |